About Me

Chang Xu is a Senior Researcher at Microsoft Research Asia, where she has been working in the Machine Learning Group since 2019. She received her Bachelor's degree from Nankai University in 2014 and completed her Ph.D. in 2019 through a joint program between Nankai University and Microsoft Research Asia.

Her research centers on sequential modeling, with a recent focus on time series analysis and generative modeling, especially in finance and healthcare domains. Her work has been published in leading venues such as IEEE Transactions on Computers, IEEE TKDE, ICLR, ICML, KDD, WWW, AAAI, IJCAI, ACM MM, ICME, and CIKM, accumulating over a thousand citations.

News

-

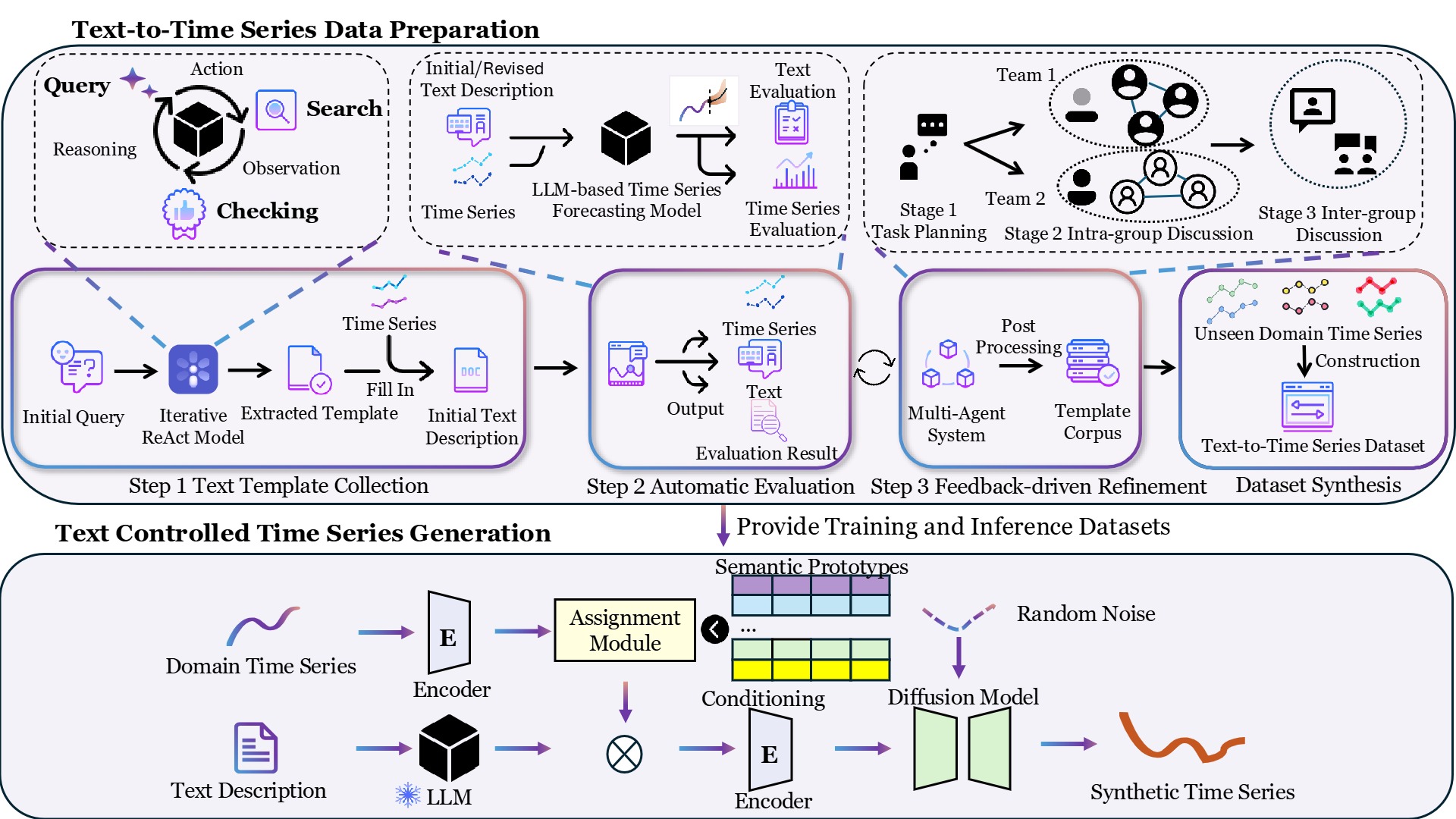

We are releasing TimeCraft, an innovative framework for synthetic time series generation!

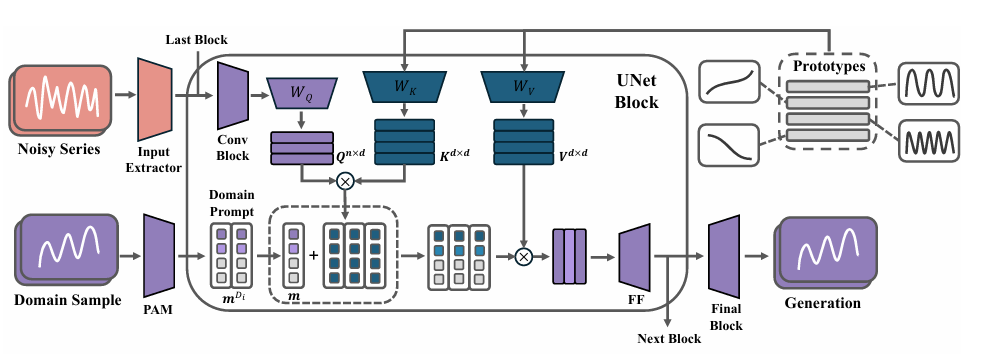

TimeCraft learns a universal latent space of semantic prototypes for time series, which enables cross-domain generalization, and uses a diffusion-based framework to generate high-fidelity, diverse data.

Key technical highlights:

- 🔹 Prototype Assignment Module (PAM): Adapts to new domains with few-shot examples.

- 🔹 Text-based Control: Leverage natural language to guide the generation process.

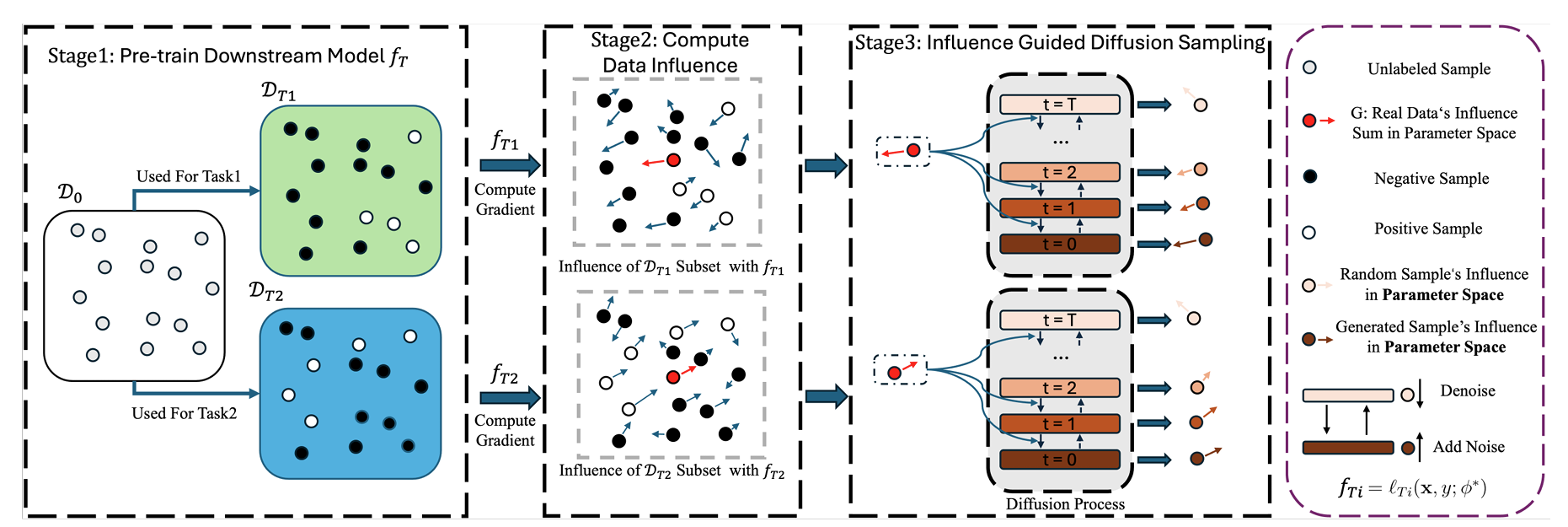

- 🔹 Influence-Guided Diffusion: Generates synthetic samples optimized for downstream task performance.

- Our paper TimeRAF: Retrieval-Augmented Foundation model for Zero-shot Time Series Forecasting has been accepted to TKDE!

- Our papers TarDiff: Target-Oriented Diffusion Guidance for Synthetic Electronic Health Record Time Series Generation and InvDiff: Invariant Guidance for Bias Mitigation in Diffusion Models have been accepted to KDD 2025!

- Our paper BRIDGE: Bootstrapping Text to Control Time-Series Generation via Multi-Agent Iterative Optimization and Diffusion Modelling has been accepted to ICML 2025!

- I will be attending WWW 2025 from 28 April to 2 May, in Sydney, Australia, and will give keynote talks at two workshops: (1) AI for Web-Centric Time Series Analysis and (2) Spatio-Temporal Data Mining from the Web!

- Our paper TimeDP: Learning to Generate Multi-Domain Time Series with Domain Prompts has been accepted to AAAI 2025!

🔗 Explore the repository:

https://github.com/microsoft/TimeCraft

📄 Chinese announcement:

Announcement link

Intern Hiring

The MSRA Machine Learning Group is looking for research interns on Time Series Foundation Models, Generative Modeling & LLMs, and ML applications in Healthcare!

- Interns will work on cutting-edge research with the goal of publishing in top-tier conferences/journals.

- Applicants should have a solid ML background, strong coding skills, and preferably prior research experience. Availability for at least 3 months onsite in Beijing is required.

- Please send your CV to chanx@microsoft.com with the subject line "Name + University + Grade" and include your available internship period in the email body.

Research Statement

Time-Series Analysis

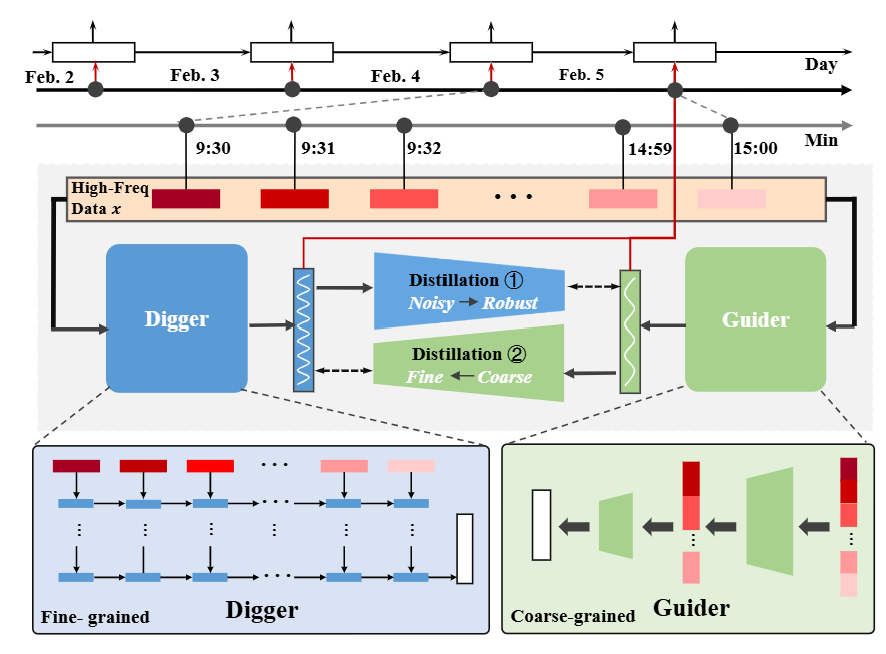

I investigate the integration of multiple levels of time-series data—both fine-grained (e.g., transaction-level or tick data) and coarse-grained (e.g., daily summaries or aggregated statistics). The challenge lies in effectively combining these different types of data to leverage their complementary strengths, and my work develops novel techniques to incorporate them into unified models, leading to improved performance and more robust predictions.
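To make the two granularities concrete, here is a minimal sketch that collapses fine-grained ticks into coarse-grained daily summaries. It only illustrates the data relationship, not any of the models described here; the function name `aggregate_ticks_to_daily`, the `(timestamp, price, volume)` tick layout, and the sample values are all assumptions made for the example.

```python
from collections import defaultdict
from datetime import datetime


def aggregate_ticks_to_daily(ticks):
    """Collapse (timestamp, price, volume) ticks into per-day OHLCV summaries.

    Hypothetical helper: the tick layout is an assumption for illustration.
    """
    by_day = defaultdict(list)
    for ts, price, volume in ticks:
        by_day[ts.date()].append((ts, price, volume))

    daily = {}
    for day, rows in by_day.items():
        rows.sort(key=lambda r: r[0])  # order ticks by time within the day
        prices = [p for _, p, _ in rows]
        daily[day] = {
            "open": prices[0],
            "high": max(prices),
            "low": min(prices),
            "close": prices[-1],
            "volume": sum(v for _, _, v in rows),
        }
    return daily


# Made-up sample ticks spanning two trading days.
ticks = [
    (datetime(2024, 1, 2, 9, 30), 100.0, 200),
    (datetime(2024, 1, 2, 9, 31), 101.5, 150),
    (datetime(2024, 1, 2, 15, 59), 100.8, 300),
    (datetime(2024, 1, 3, 9, 30), 101.0, 100),
]
daily = aggregate_ticks_to_daily(ticks)
```

A model that sees both `ticks` and `daily` has access to the same market at two resolutions, which is the kind of complementary input the paragraph above refers to.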

In addition to addressing granularity, I explore the power of cross-domain learning. Financial markets, healthcare, and other domains often share common underlying patterns, yet have domain-specific nuances. By capturing these general patterns and integrating relevant domain knowledge, I develop models that can transfer insights across domains. This approach enhances performance in data-scarce or noisy target domains and provides a way to apply learned knowledge from one area to another, creating more adaptable and versatile models.

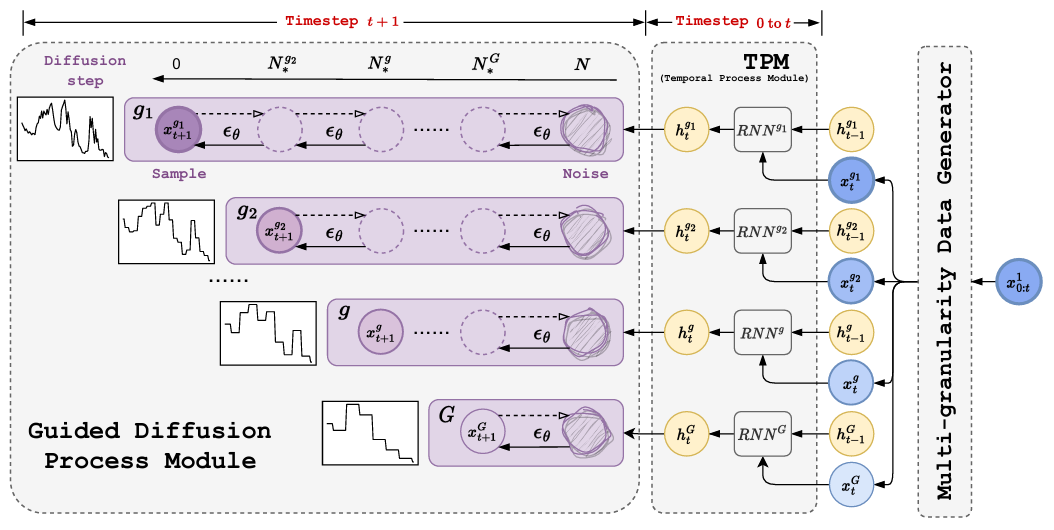

Beyond traditional predictive modeling, I place significant emphasis on time-series generation. Generative models play a crucial role in simulations, data augmentation, and scenario testing. My research focuses on generating time-series data that is not only realistic but also controllable and interactive. This means developing methods to produce data that can be tailored to specific domains and even adjusted based on particular requirements—be it for risk evaluation, testing, or forecasting with specific constraints.

Finally, I emphasize interpretability in time-series models, ensuring transparency in how predictions are made, which is crucial for trust and application in high-stakes domains.

AI in Finance

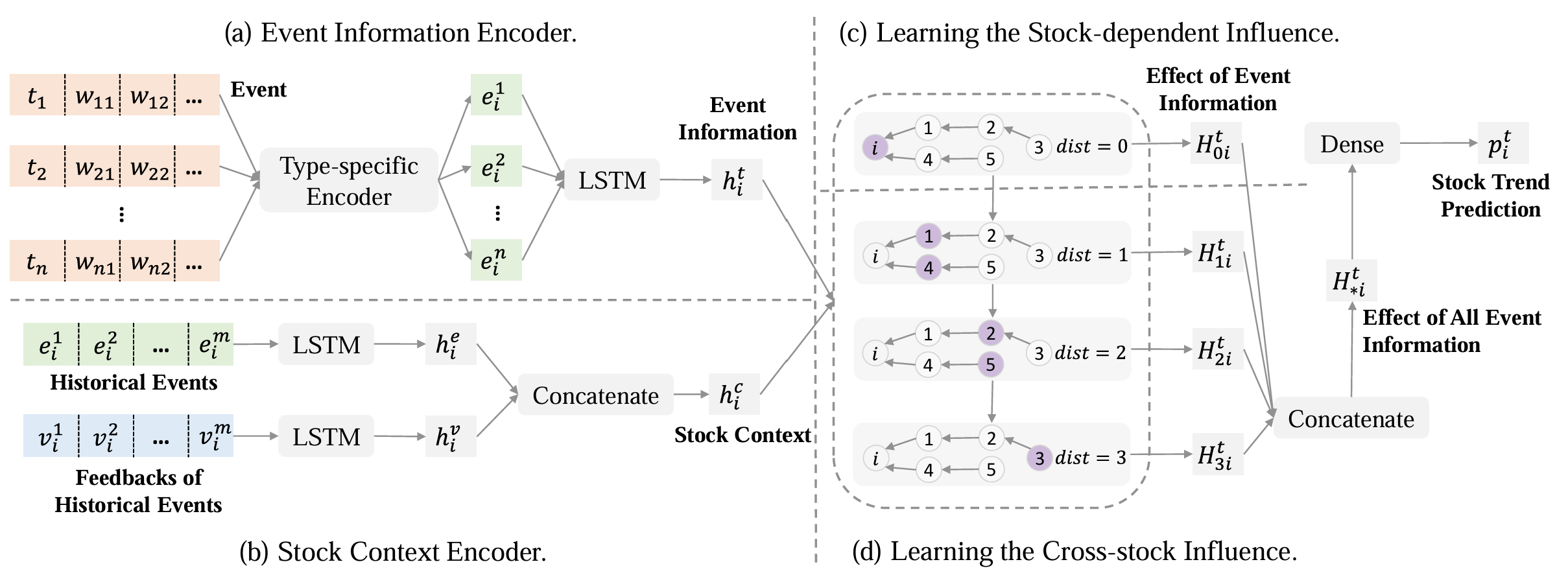

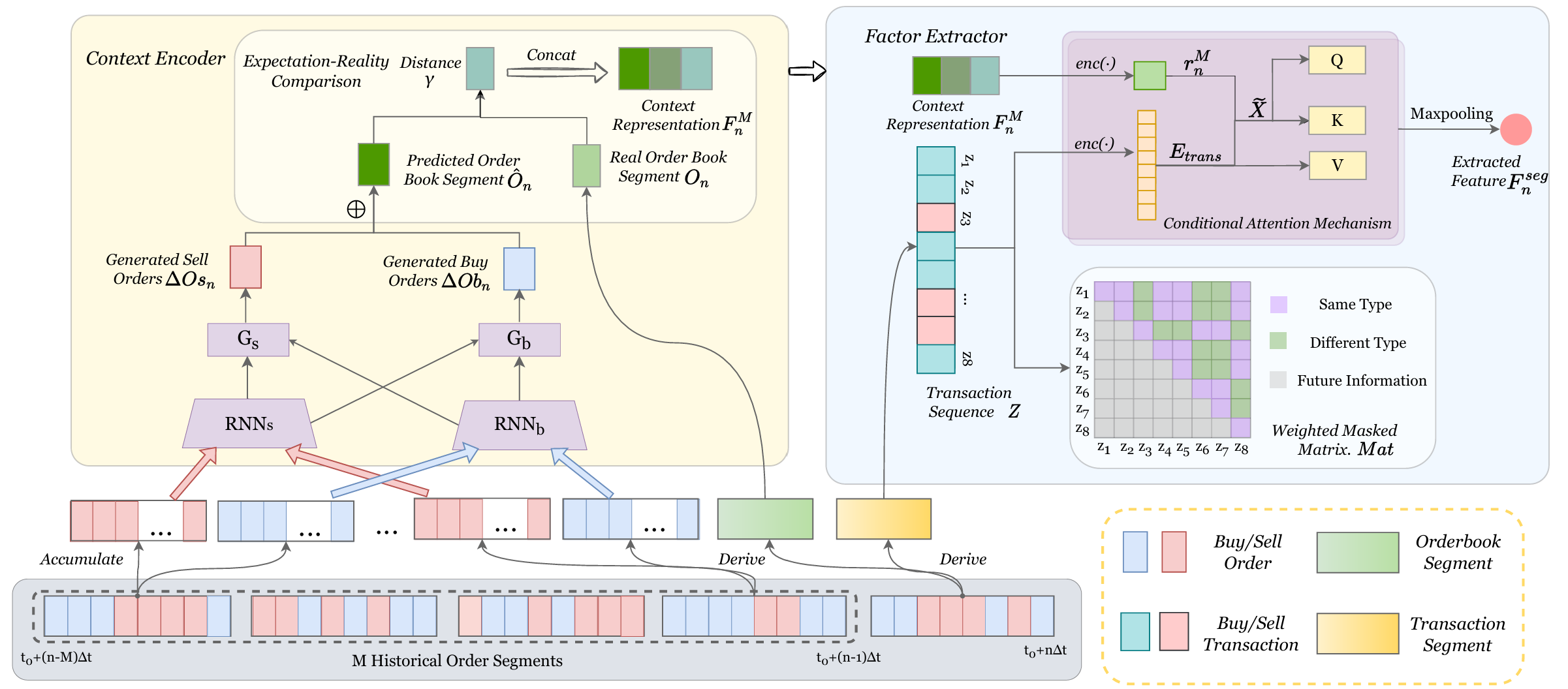

One of my core research areas is AI in finance, specifically in stock market modeling. The stock market presents a rich and challenging environment for machine learning, characterized by dynamic, high-dimensional, and sequential data. Modeling financial markets requires sophisticated techniques to capture temporal dependencies and fluctuations, which I address using sequential models such as Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, and Transformers.

In the context of financial data, two primary types of time-series data are crucial:

- Price-Volume Data: This is coarse-grained data that captures overall market trends, including price movements and trading volumes. Predictive models built on this data are focused on identifying broader market trends and forecasting stock price fluctuations.

- Order Book Data: This is fine-grained data representing real-time order book dynamics, detailing the bid-ask spreads, order flows, and market depth at different price levels. Models that process order book data require a higher level of granularity and are particularly useful for analyzing market microstructure and short-term price movements.
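As a rough illustration of the order book quantities named above (bid-ask spread and market depth), the sketch below derives them from a single snapshot. The function `order_book_features`, the `(price, size)` layout, the `levels` parameter, and the sample quotes are hypothetical and chosen only for the example; the depth imbalance is a commonly used summary added here for illustration.

```python
def order_book_features(bids, asks, levels=2):
    """Compute simple features from one order book snapshot.

    bids, asks: lists of (price, size) tuples (assumed layout).
    levels: number of price levels on each side included in the depth.
    """
    bids_sorted = sorted(bids, key=lambda x: x[0], reverse=True)  # best bid first
    asks_sorted = sorted(asks, key=lambda x: x[0])                # best ask first

    best_bid = bids_sorted[0][0]
    best_ask = asks_sorted[0][0]

    bid_depth = sum(size for _, size in bids_sorted[:levels])
    ask_depth = sum(size for _, size in asks_sorted[:levels])

    return {
        "spread": best_ask - best_bid,
        "mid_price": (best_ask + best_bid) / 2,
        "bid_depth": bid_depth,
        "ask_depth": ask_depth,
        # Positive values mean more resting size on the bid side.
        "imbalance": (bid_depth - ask_depth) / (bid_depth + ask_depth),
    }


# Made-up snapshot with three levels on each side.
bids = [(99.5, 300), (99.0, 500), (98.5, 200)]
asks = [(100.0, 200), (100.5, 200), (101.0, 100)]
features = order_book_features(bids, asks, levels=2)
```

Features like these change with every order event, which is why order book data is much finer-grained than daily price-volume data.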

In addition to time-series data, I also explore the integration of textual data such as financial news, reports, and social media sentiment. These data sources help uncover critical events and reveal the relationships between stocks, offering a richer context and more comprehensive predictions for market behavior. By incorporating these heterogeneous data sources, I aim to enhance the predictive power and robustness of financial models.

My research in finance focuses on two primary tasks:

- Prediction: Developing robust models that forecast stock price trends, market volatility, and financial risk based on historical and real-time data.

- Generation: Building generative models that simulate realistic financial scenarios, improving market simulations and decision-making in uncertain environments.

Through these efforts, my research seeks to bridge the gap between AI techniques and real-world financial applications, improving both predictive accuracy and model interpretability.

Research Works

Publications

Topics: AI in Finance / Time-series Analysis / Generative Modeling / Sequential Modeling (* indicates equal contribution)

TarDiff: Target-Oriented Diffusion Guidance for Synthetic Electronic Health Record Time Series Generation

Bowen Deng, Chang Xu, Hao Li, Yu-Hao Huang, Min Hou, Jiang Bian

BRIDGE: Bootstrapping Text to Control Time-Series Generation via Multi-Agent Iterative Optimization and Diffusion Modelling

Hao Li, Yu-Hao Huang, Chang Xu, Viktor Schlegel, Renhe Jiang, Riza Batista-Navarro, Goran Nenadic, Jiang Bian

TimeDP: Learning to Generate Multi-Domain Time Series with Domain Prompts

Yu-Hao Huang, Chang Xu, Yueying Wu, Wu-Jun Li, Jiang Bian

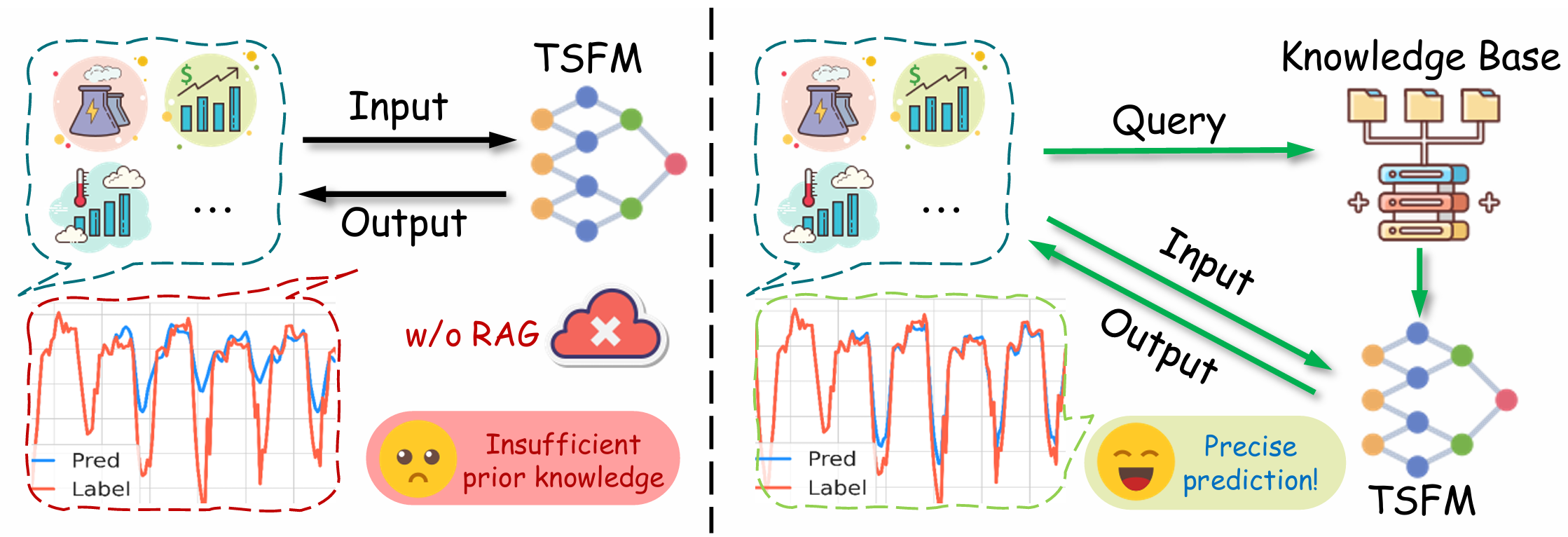

TimeRAF: Retrieval-Augmented Foundation model for Zero-shot Time Series Forecasting

Huanyu Zhang, Chang Xu, Yi-Fan Zhang, Zhang Zhang, Liang Wang, Jiang Bian, Tieniu Tan

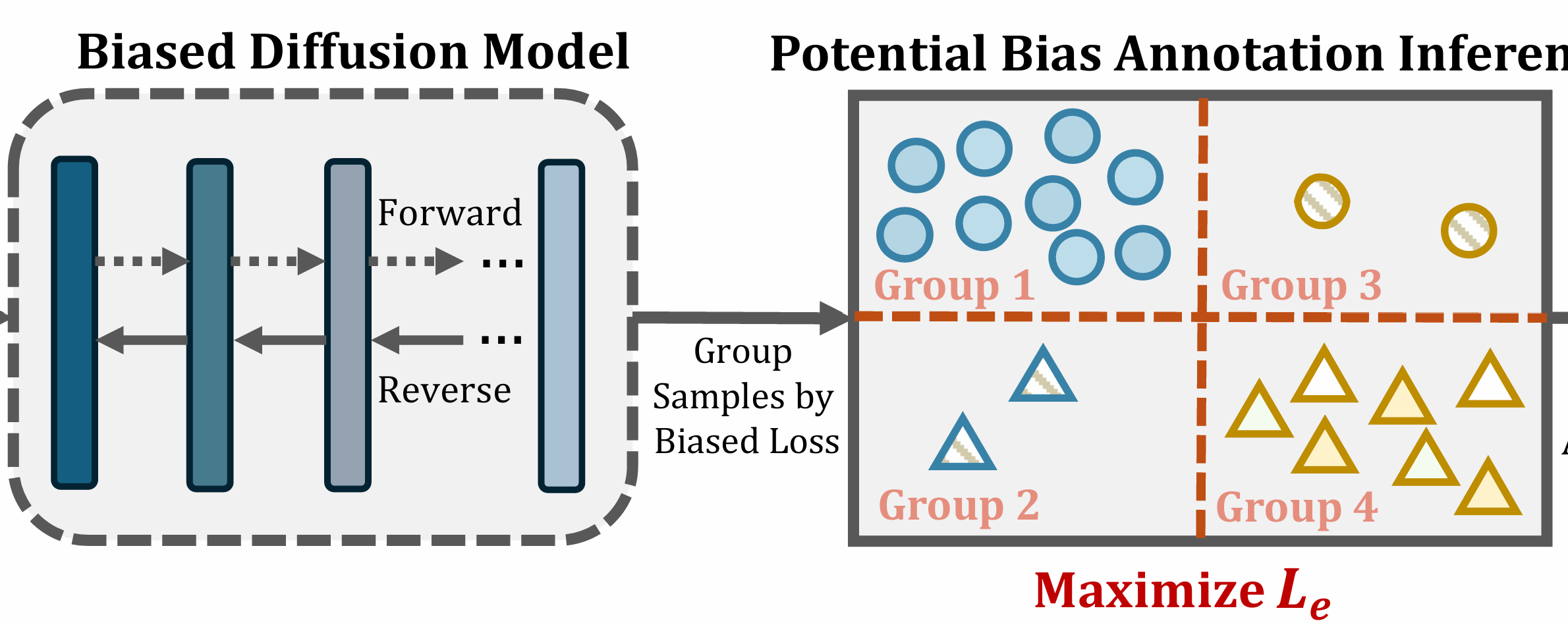

InvDiff: Invariant Guidance for Bias Mitigation in Diffusion Models

Min Hou*, Yueying Wu*, Chang Xu, Yu-Hao Huang, Xibai Chen, Le Wu, Jiang Bian

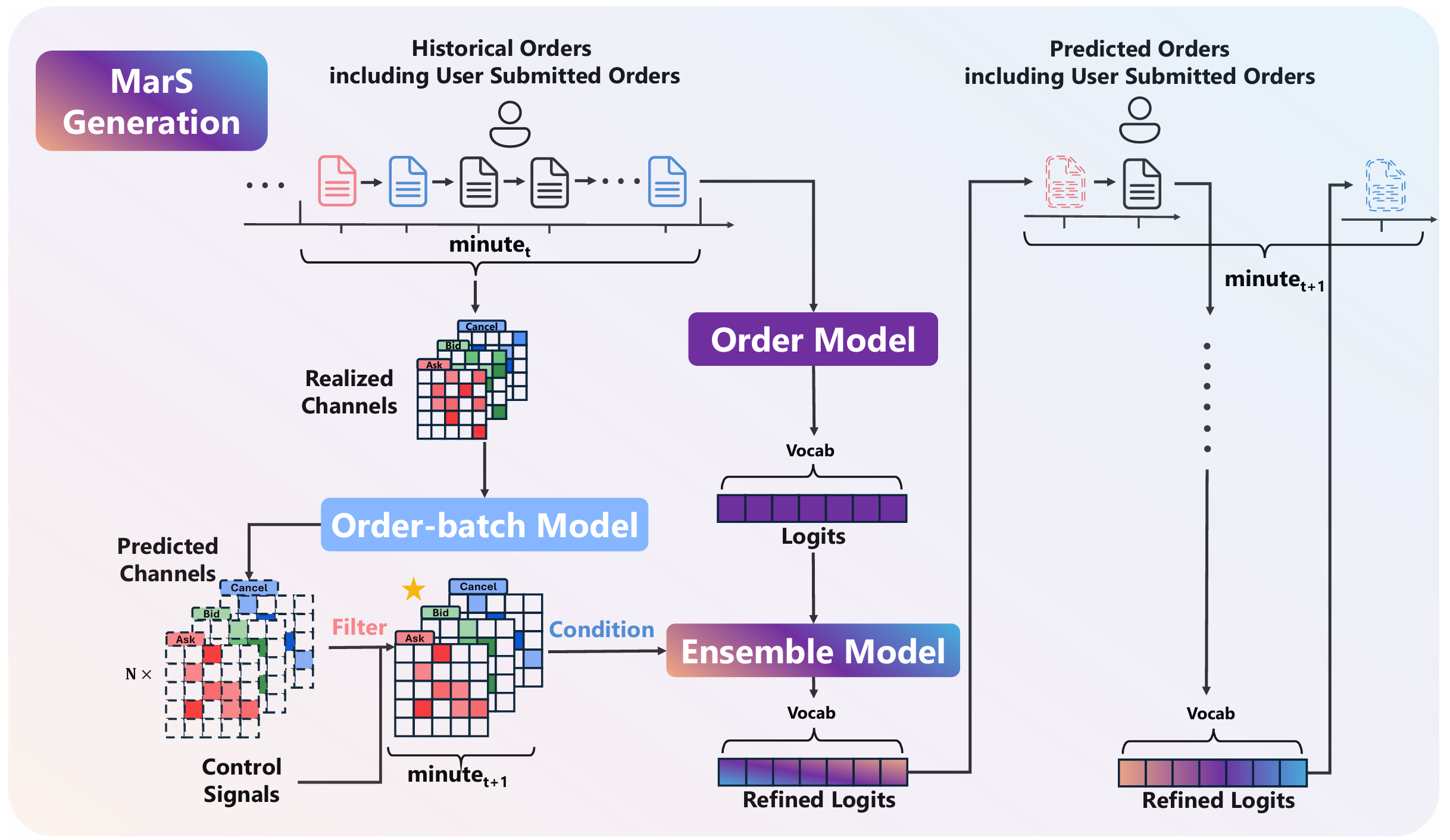

MarS: a Financial Market Simulation Engine Powered by Generative Foundation Model

Junjie Li, Yang Liu, Weiqing Liu, Shikai Fang, Lewen Wang, Chang Xu, Jiang Bian

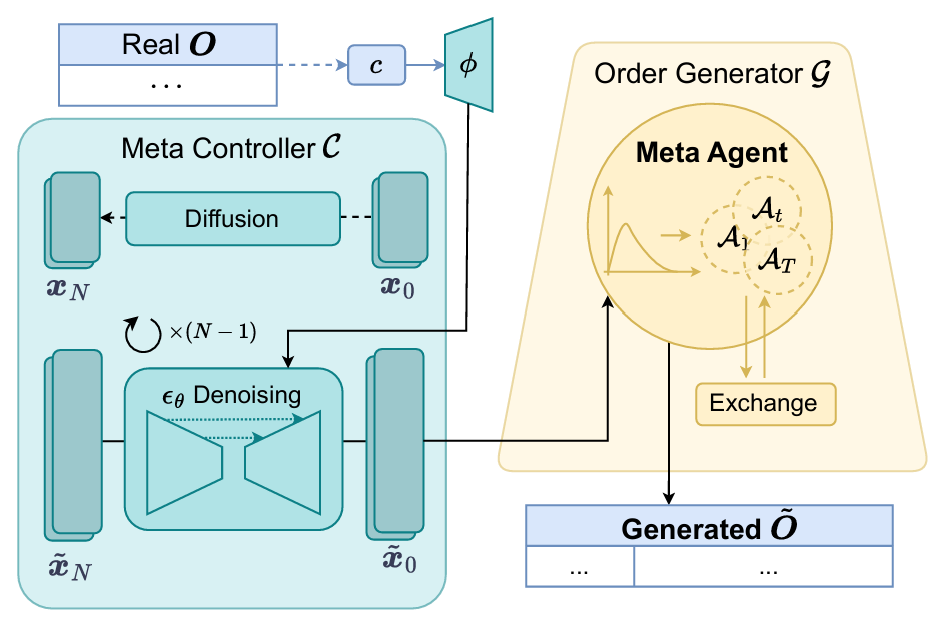

Controllable Financial Market Generation with Diffusion Guided Meta Agent

Yu-Hao Huang, Chang Xu, Yang Liu, Weiqing Liu, Wu-Jun Li, Jiang Bian

Digger-Guider: High-Frequency Factor Extraction for Stock Trend Prediction

Yang Liu, Chang Xu, Min Hou, Weiqing Liu, Jiang Bian, Qi Liu, Tie-Yan Liu

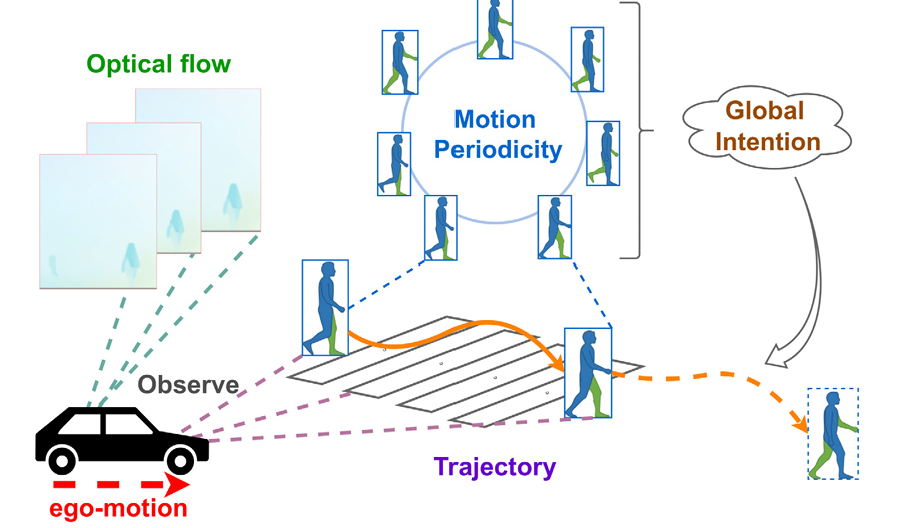

A multimodal stepwise-coordinating framework for pedestrian trajectory prediction

Yijun Wang, Zekun Guo, Chang Xu, Jianxin Lin

MG-TSD: Multi-granularity time series diffusion models with guided learning process

Xinyao Fan, Yueying Wu, Chang Xu, Yuhao Huang, Weiqing Liu, Jiang Bian

Microstructure-Empowered Stock Factor Extraction and Utilization

Xianfeng Jiao, Zizhong Li, Chang Xu, Yang Liu, Weiqing Liu, Jiang Bian

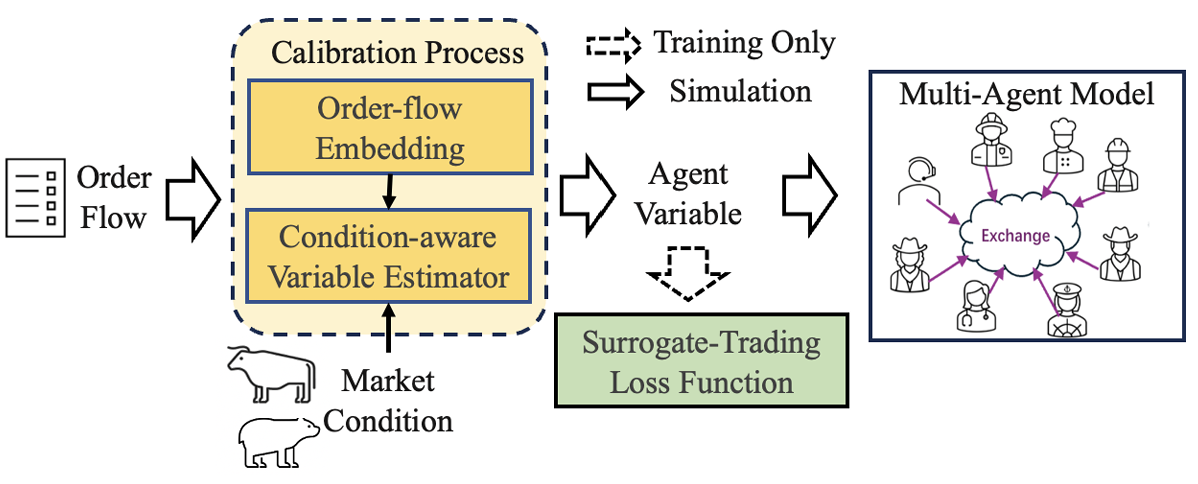

Efficient Behavior-consistent Calibration for Multi-agent Market Simulation

Tianlang He, Keyan Lu, Chang Xu, Yang Liu, Weiqing Liu, S-H Gary Chan, Jiang Bian

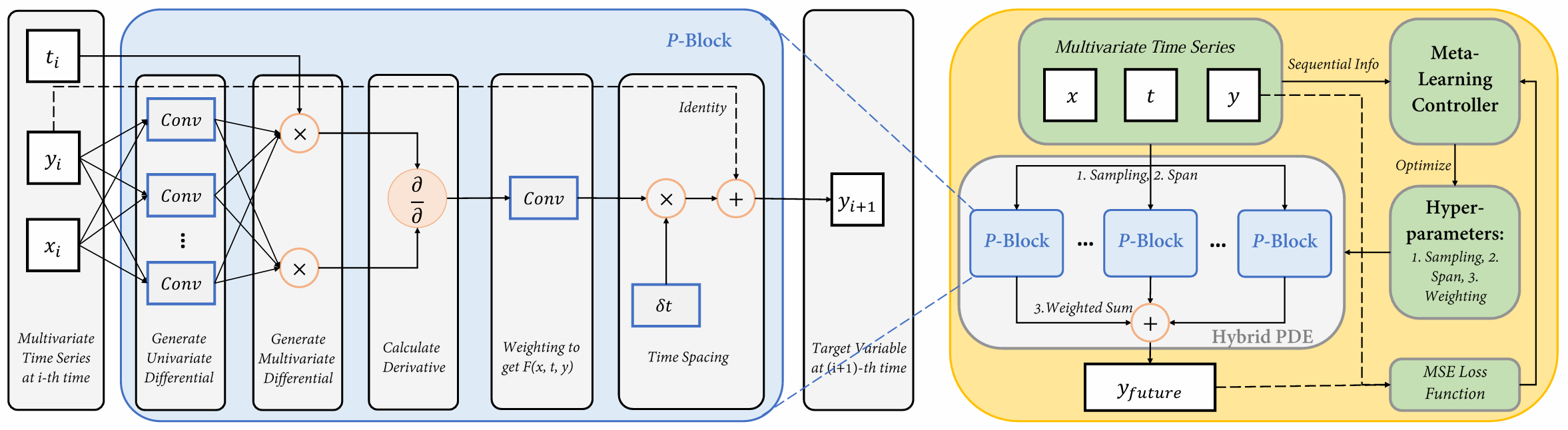

Learning differential operators for interpretable time series modeling

Yingtao Luo, Chang Xu, Yang Liu, Weiqing Liu, Shun Zheng, Jiang Bian

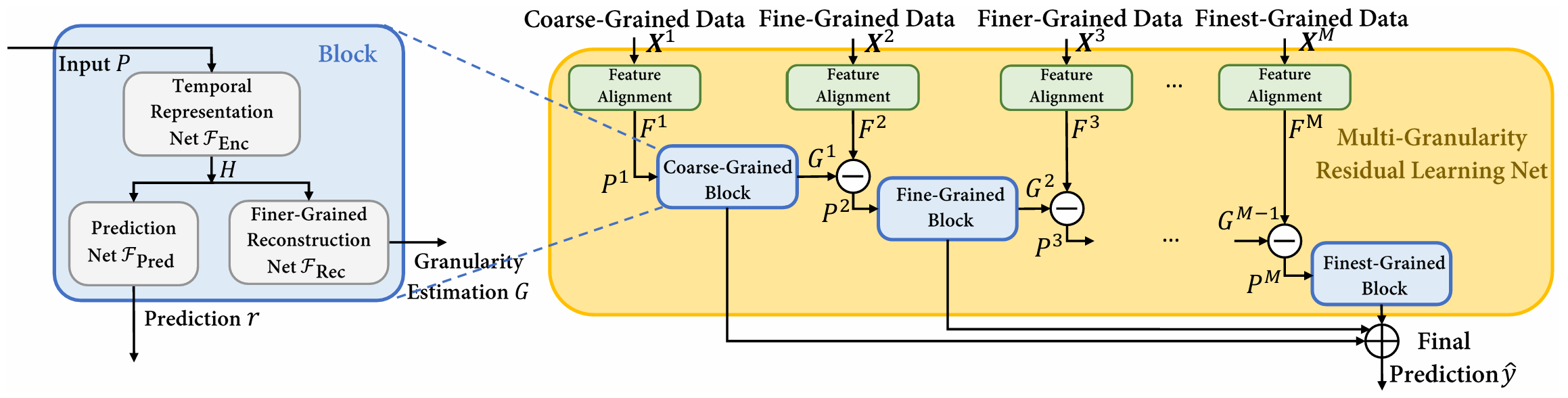

Multi-granularity residual learning with confidence estimation for time series prediction

Min Hou, Chang Xu, Zhi Li, Yang Liu, Weiqing Liu, Enhong Chen, Jiang Bian

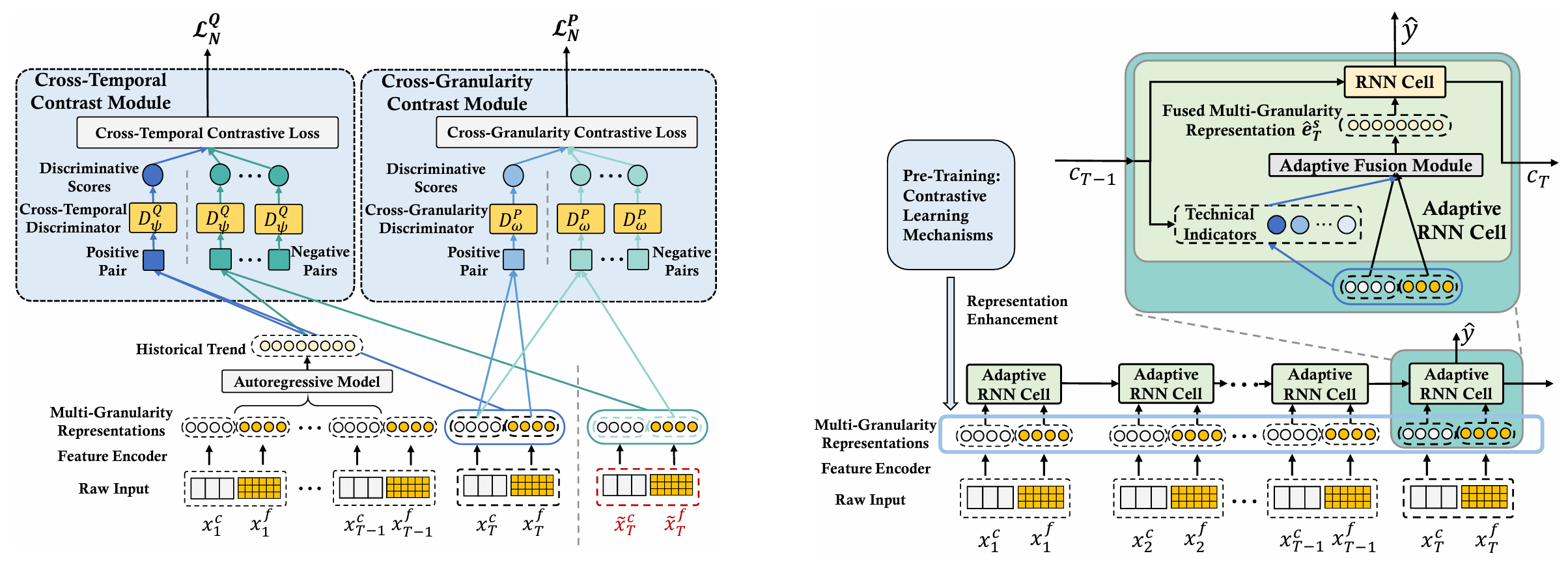

Stock trend prediction with multi-granularity data: A contrastive learning approach with adaptive fusion

Min Hou, Chang Xu, Yang Liu, Weiqing Liu, Jiang Bian, Le Wu, Zhi Li, Enhong Chen, Tie-Yan Liu